Section: New Results

Events Recognition and Performance Evaluation

Participants : Ricardo Cezar Bonfim Rodrigues, François Brémond.

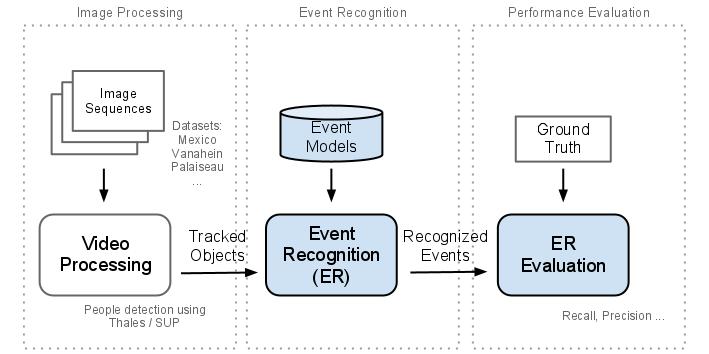

The goal of this work is to evaluate the accuracy and performance of event detection (see the workflow in Figure 18). The experiments use tools developed by the Pulsar team: the Scene Understanding Platform (SUP) (http://raweb.inria.fr/rapportsactivite/RA2010/pulsar/uid27.html ), a plugin for event detection [50], and ViSEvAl (http://www-sop.inria.fr/teams/pulsar/EvaluationTool/ViSEvAl_Description.html ).

The experiments were performed on video sequences of a subway station (VANAHEIN dataset), where the goal was to detect events such as people waiting, entering, or buying tickets. Preliminary results showed very low accuracy and demonstrated that event detection is very sensitive to the scenario configuration parameters. Many of the expected events were missed or misclassified, especially composite events (those requiring the recognition of more than one activity); Figure 19 illustrates some of these issues.


Based on these issues, a second experiment was configured using 3 different video sequences. In this new experiment, the scenario was adjusted to be more tolerant of people detection errors, the camera calibration was refined, and some events were remodeled. After these changes, the results improved significantly. This last experiment showed that the engine proposed by the Pulsar team is able to detect events accurately, although event modeling can be very sensitive to the scenario configuration (see the results in Table 3).

| | Sequence 1 | Sequence 2 | Sequence 3 |
|---|---|---|---|
| Precision (global) | 0.73 | 1.00 | 0.88 |
| Sensitivity (global) | 0.82 | 0.90 | 0.85 |
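The report does not spell out how the two metrics in Table 3 are computed. Assuming they follow the usual definitions (precision as the fraction of detected events that are correct, sensitivity as the fraction of expected events that are detected), a minimal sketch looks as follows. The `EventCounts` class, the function names, and the counts are hypothetical illustrations; they are not taken from the experiments or from the ViSEvAl tool.

```python
from dataclasses import dataclass


@dataclass
class EventCounts:
    """Hypothetical per-sequence counts from matching detections to ground truth."""
    true_positives: int   # expected events that were correctly detected
    false_positives: int  # detected events with no matching expected event
    false_negatives: int  # expected events that were missed


def precision(counts: EventCounts) -> float:
    """Fraction of detected events that are correct: TP / (TP + FP)."""
    detected = counts.true_positives + counts.false_positives
    return counts.true_positives / detected if detected else 0.0


def sensitivity(counts: EventCounts) -> float:
    """Fraction of expected events that were detected: TP / (TP + FN)."""
    expected = counts.true_positives + counts.false_negatives
    return counts.true_positives / expected if expected else 0.0


# Illustrative counts only (assumption, not data from the report).
example = EventCounts(true_positives=8, false_positives=2, false_negatives=2)
print(f"precision={precision(example):.2f} sensitivity={sensitivity(example):.2f}")
```

Under these definitions, a missed composite event lowers sensitivity, while a misclassified event typically lowers both metrics, since it counts as a false positive for the wrong event and a false negative for the expected one.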